No application would be complete (or even functional in many cases) without being able to draw stuff on the screen. The MHP specification uses a mixture of a cut-down version of AWT and a GUI API from the HAVi specification.

The graphics model is probably one of the most complex parts of MHP, and for good reason: there are a lot of different issues that need to be addressed when you are talking about graphics in a TV environment. Here are just some of the things that you typically have to consider:

- Pixel aspect ratios. Video and TV applications typically use non-square pixels, while computer graphics APIs usually assume that pixels are square. Combining these two models can make things very complex for developers and designers. To make it worse, some receivers may not be able to align the two models at all, so an application trying to produce perfect overlays of graphics on video could be in for a very hard time.

- Aspect ratio changes. As if the previous point wasn’t enough, the aspect ratio of a TV signal may change from the standard 4:3 to widescreen (16:9) or even 14:9. This could be changed by the TV signal itself, or by the user. In either case the effect on graphics and images not designed for that aspect ratio can be pretty unpleasant from an application developer’s point of view. It doesn’t look good when all circles in an application stop being circular, for instance.

- Translucency and transparency. How can we make graphics transparent so that the viewer can see what’s underneath them?

- Colour space issues – how do we map the RGB colour space used by Java on to the YUV colour space used by TV signals?

- No window manager. Window managers are too complex to be used on many MHP receivers, so an application needs some other way of getting an area it can draw on. This also means that an application will have to coexist with other applications in a way that’s different from standard Java or PC applications.

- User interface differences – an application may not have input focus if another application is active. The application may also need a new user interface metaphor, if the user only has a TV remote to interact with it.
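To put some numbers on the pixel aspect ratio and aspect ratio problems above, the following sketch computes the shape of a pixel when a 720×576 raster fills a 4:3 or a 16:9 picture, and how many pixels across a shape needs to span to look circular. It is a simplified calculation that assumes the whole raster is mapped onto the full picture width; broadcast standards such as ITU-R BT.601 define the active picture area slightly differently, so real figures will differ a little. The PixelAspect class and its methods are hypothetical names.

```java
// Hypothetical helper: simplified pixel aspect ratio arithmetic.
// Assumes the whole raster spans the full picture width, which is
// only approximately true for real broadcast standards.
final class PixelAspect {

    /**
     * Width of one pixel divided by its height, when a raster of
     * width x height pixels fills a display of the given aspect ratio
     * (for example 4.0 / 3.0 or 16.0 / 9.0).
     */
    static double pixelAspectRatio(int width, int height, double displayAspect) {
        return displayAspect * height / width;
    }

    /**
     * Number of pixels across that a shape heightPx lines tall must
     * span in order to look circular on screen.
     */
    static int widthForCircle(int heightPx, double pixelAspect) {
        return (int) Math.round(heightPx / pixelAspect);
    }
}
```

Under these assumptions a pixel is about 1.07 times as wide as it is tall in 4:3 mode and about 1.42 times in 16:9 mode, so a circle 100 lines tall needs to be about 94 pixels wide in the first case but only about 70 in the second. The same arithmetic explains why graphics designed for one aspect ratio look squashed or stretched in the other: stretching a 4:3 picture to fill a 16:9 screen makes every pixel (16/9)/(4/3) = 4/3 times wider.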

Given all these issues, it’s probably not surprising that there are a lot of graphics-related details that you need to think about as a developer. Many of the lessons learned from developing AWT applications still apply, however, although you typically won’t want a standard PC-like look and feel for your applications. There are a few differences caused by the constraints of a TV environment, but most of the time anyone who can develop an AWT application will be happy working with the graphics APIs in MHP.

In general, MHP uses a subset of the PersonalJava AWT classes, without any of the heavyweight GUI classes that depend on an underlying windowing system. Most of the differences come from the extensions that MHP has added. The HAVi (Home Audio Video Interoperability) standard defines a set of Java GUI extensions known as the HAVi Level 2 GUI, which includes a new widget set that does not require a windowing system and a set of classes for managing scarce resources and allowing applications to share the screen when there is no window manager. DVB has adopted these extensions for MHP because they fulfill almost all of MHP’s requirements, and because using them saved DVB from having to define its own extensions.

These extensions are contained in the org.havi.ui package, and are related to several areas of graphics operations. The first of these, and the easiest to understand, is a set of extra GUI widgets that are designed for use on a TV screen, both to fit TV-specific limitations and requirements, and to provide a TV-specific interaction model. These classes (such as org.havi.ui.HComponent and org.havi.ui.HContainer) should be used in place of their AWT equivalents.

We will examine several elements of the org.havi.ui package in the rest of this section, but before we can do that, we have to understand the graphics model in an MHP receiver.

Screen Devices and the MHP display model

An interactive TV display can typically be split into three layers. The background layer is usually only capable of displaying a single colour, or if you’re lucky a still image. On top of that, there is the video layer. As its name suggests, this is the layer where video is shown. Video decoders in set-top boxes usually have a fairly limited feature set, and may only be able to display video at full-screen and quarter-screen resolutions, and at a limited set of positions.

On top of that, there is the graphics layer. This is the layer that graphics operations in MHP will actually draw to. This graphics layer may have a different resolution from the video or background layers, and may even have a different shape for the pixels (video pixels are typically rectangular, graphics pixels are typically square). Given the nature of a typical set-top box, you can’t expect anything too fancy from this graphics layer – 256 colours at something approaching 320×200 may be the best that an application developer can expect.

Layers in the MHP graphics subsystem.

All of these layers may be configured separately, since there are quite possibly different components in the system responsible for generating all of these layers. As you can imagine, this is potentially a major source of headaches for MHP graphics developers. To complicate it further, while the layers can be configured separately, there is some interaction, and configuring one layer in a specific way may impose constraints on the way the other layers can be configured.

HAVi (and MHP) defines an HScreen class to help solve this problem. This represents a physical display device, and every MHP receiver will have one HScreen instance for every physical display device that’s connected to it; typically there will only be one. Every HScreen has a number of HScreenDevice objects, which represent the various layers in the display. Typically, every HScreen will have one each of the following HScreenDevice subclasses:

- HBackgroundDevice, representing the background layer

- HVideoDevice, representing the video layer

- HGraphicsDevice, representing the graphics layer

As we can see from the HScreen class definition below, we can get references to these devices by calling the appropriate methods on the HScreen object:

    public class HScreen {
        public static HScreen[] getHScreens();
        public static HScreen getDefaultHScreen();
        public HVideoDevice[] getHVideoDevices();
        public HGraphicsDevice[] getHGraphicsDevices();
        public HVideoDevice getDefaultHVideoDevice();
        public HGraphicsDevice getDefaultHGraphicsDevice();
        public HBackgroundDevice getDefaultHBackgroundDevice();
        public HScreenConfiguration[] getCoherentScreenConfigurations(
            HScreenConfigTemplate[] hscta);
        public boolean setCoherentScreenConfigurations(
            HScreenConfiguration[] hsca);
    }

We can get access to the default devices, but in the case of the video and graphics devices, there may also be other devices that we can access.

Obviously we can only have one background, but features like picture-in-picture allow multiple video devices, and if multiple graphics layers are supported, we can have a graphics device for each layer. This is shown in the diagram below.

The devices that make up an MHP display.

Configuring screen devices

Once we have a device, we can configure it using instances of the HScreenConfiguration class and its subclasses. Using these, we can set the pixel aspect ratio, screen aspect ratio, screen resolution and other parameters that we may wish to change. These configurations are set using subclasses of the HScreenConfigTemplate class. An HScreenConfigTemplate provides a mechanism that lets us set the parameters we would like for a given screen device, and we can then query whether this configuration is possible given the configuration of the other screen devices.

Let’s examine how this works, taking the graphics device as an example. The HGraphicsDevice class has the following interface:

    public class HGraphicsDevice extends HScreenDevice {
        public HGraphicsConfiguration[] getConfigurations();
        public HGraphicsConfiguration getDefaultConfiguration();
        public HGraphicsConfiguration getCurrentConfiguration();
        public boolean setGraphicsConfiguration(HGraphicsConfiguration hgc)
            throws SecurityException,
                   HPermissionDeniedException,
                   HConfigurationException;
        public HGraphicsConfiguration getBestConfiguration(
            HGraphicsConfigTemplate hgct);
        public HGraphicsConfiguration getBestConfiguration(
            HGraphicsConfigTemplate[] hgcta);
    }

The first three methods are pretty obvious – these let us get a list of possible configurations, the default configuration and the current configuration. The next method is equally obvious, and lets us set the configuration of the device. The only thing to note here is the HConfigurationException that may get thrown if an application tries to set a configuration that’s not compatible with the configurations of other screen devices.

The last two methods are the most interesting ones for us. The getBestConfiguration() method takes an HGraphicsConfigTemplate (a subclass of HScreenConfigTemplate) as an argument. This allows the application to construct a template and set some preferences for it (we’ll see how to do this in a few moments) and then find out which configuration best fits those preferences, given the current configuration of the other screen devices. The variant of this method takes an array of HGraphicsConfigTemplate objects and returns the configuration that best fits all of them, but this is used less often. Once we have a suitable configuration, we can set it using the setGraphicsConfiguration() method.

As we’ve already seen, the HAVi API uses the HScreenConfigTemplate class to define a set of preferences for the configuration. These preferences have two parts: the preference itself, and a priority. The preferences are fairly self-explanatory, and valid preferences are defined by constant values in the HScreenConfigTemplate class and its subclasses. We’ll only mention three of these preferences here. The ZERO_GRAPHICS_IMPACT and ZERO_VIDEO_IMPACT preferences specify that a configuration shouldn’t have an effect on already running graphical applications or on currently playing video, and the VIDEO_GRAPHICS_PIXEL_ALIGNED preference indicates whether the pixels in the video and graphics layers should be perfectly aligned (e.g. if the application wants to use pixel-perfect graphics overlays on video).

The more interesting part is the priority that’s assigned to every preference. This can take one of the following values:

- REQUIRED: this preference must be met

- PREFERRED: this preference should be met, but may be ignored if necessary

- UNNECESSARY: the application has no preferred value for this preference

- PREFERRED_NOT: this preference should not take the specified value, but may if necessary

- REQUIRED_NOT: this preference must not take the specified value

This gives the application a reasonable degree of freedom in specifying the configuration it wants, while still allowing the receiver to be flexible in matching the desires of the application with those of other applications and the constraints imposed by other screen device configurations.

When the application calls the HGraphicsDevice.getBestConfiguration() method (or the equivalent method on another screen device), the receiver checks the preferences specified in the HScreenConfigTemplate and attempts to find a configuration that matches all the preferences specified as REQUIRED and REQUIRED_NOT, while meeting the constraints imposed by other applications. If this can be done, the method returns an HGraphicsConfiguration object representing the new configuration; if the constraints can’t all be met, it returns null.
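The matching rules described above can be illustrated with a small, self-contained model. This is not the HAVi implementation: the Priority enum, the ConfigMatcher class and the feature names are hypothetical, each candidate configuration is reduced to a set of boolean features, and ranking candidates by how many PREFERRED and PREFERRED_NOT preferences they meet is an assumption, since a real receiver is free to use its own strategy. It also uses Java 5 and Java 8 features (enum, Map.getOrDefault()) that are not available on the Java 1.1-based MHP platform.

```java
import java.util.List;
import java.util.Map;

// Hypothetical mirror of the five priority levels used by HScreenConfigTemplate.
enum Priority { REQUIRED, PREFERRED, UNNECESSARY, PREFERRED_NOT, REQUIRED_NOT }

final class ConfigMatcher {

    /**
     * Toy stand-in for getBestConfiguration(). Each candidate maps a feature
     * name to whether that configuration offers it. Candidates that break a
     * REQUIRED or REQUIRED_NOT preference are rejected; the rest are scored
     * by how many PREFERRED / PREFERRED_NOT preferences they meet.
     * Returns null if no candidate satisfies all the hard constraints.
     */
    static Map<String, Boolean> best(List<Map<String, Boolean>> candidates,
                                     Map<String, Priority> template) {
        Map<String, Boolean> best = null;
        int bestScore = -1;
        for (Map<String, Boolean> candidate : candidates) {
            boolean acceptable = true;
            int score = 0;
            for (Map.Entry<String, Priority> pref : template.entrySet()) {
                boolean has = candidate.getOrDefault(pref.getKey(), false);
                switch (pref.getValue()) {
                    case REQUIRED:      acceptable &= has;  break;
                    case REQUIRED_NOT:  acceptable &= !has; break;
                    case PREFERRED:     if (has) score++;   break;
                    case PREFERRED_NOT: if (!has) score++;  break;
                    default:            break; // UNNECESSARY: ignored
                }
            }
            if (acceptable && score > bestScore) {
                best = candidate;
                bestScore = score;
            }
        }
        return best;
    }
}
```

For example, with ZERO_GRAPHICS_IMPACT marked as REQUIRED and IMAGE_SCALING_SUPPORT as PREFERRED, a candidate without zero graphics impact is rejected outright, and among the remaining candidates one that also supports image scaling wins. If every candidate fails a REQUIRED preference, best() returns null, mirroring getBestConfiguration().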

This sounds horribly complex, but it’s not as bad as it seems at first. For each of the three device classes (HBackgroundDevice, HVideoDevice, and HGraphicsDevice), the configuration classes have analogous names (HBackgroundConfiguration, HVideoConfiguration, and HGraphicsConfiguration respectively) and so do the configuration templates (HBackgroundConfigTemplate, HVideoConfigTemplate, and HGraphicsConfigTemplate).

One thing that becomes apparent from this way of configuring the receiver’s display devices is that the configuration may change while an application is running. Since applications usually want to know when their display properties have changed, the HScreenDevice class allows applications to register as listeners for HScreenConfigurationEvent events using the addScreenConfigurationListener() method. These events inform applications when the configuration of a device changes in a way that isn’t compatible with the template passed as an argument to addScreenConfigurationListener(). An application can use them to find out when its display has changed in a way that requires it to change its own display settings, and react accordingly.

Something this complex deserves a code example, so here’s one that shows how to set the configuration on a graphics device:

    import org.havi.ui.HGraphicsConfigTemplate;
    import org.havi.ui.HGraphicsConfiguration;
    import org.havi.ui.HGraphicsDevice;
    import org.havi.ui.HScreen;
    import org.havi.ui.HScreenConfigTemplate;

    // First, get the HScreen. We'll just use the default
    // HScreen, since we probably only have one.
    HScreen screen = HScreen.getDefaultHScreen();

    // Now get the default HGraphicsDevice, again because
    // we'll probably only have one of them.
    HGraphicsDevice device = screen.getDefaultHGraphicsDevice();

    // Create a new template for the graphics configuration
    // and start setting preferences.
    HGraphicsConfigTemplate template = new HGraphicsConfigTemplate();

    // We prefer a configuration that supports image scaling...
    template.setPreference(HGraphicsConfigTemplate.IMAGE_SCALING_SUPPORT,
                           HScreenConfigTemplate.PREFERRED);

    // ...and we require a configuration that doesn't affect
    // applications that are already running.
    template.setPreference(HScreenConfigTemplate.ZERO_GRAPHICS_IMPACT,
                           HScreenConfigTemplate.REQUIRED);

    // Now get a device configuration that matches our preferences.
    HGraphicsConfiguration configuration =
        device.getBestConfiguration(template);

    // Finally, set the configuration. getBestConfiguration()
    // returns null if our REQUIRED preferences can't be met,
    // so check that first. (Depending on the receiver, the
    // application may also need to reserve the device before
    // changing its configuration.)
    if (configuration != null) {
        try {
            device.setGraphicsConfiguration(configuration);
        } catch (Exception e) {
            // We will ignore the exceptions (SecurityException,
            // HPermissionDeniedException, HConfigurationException)
            // for this example.
        }
    }

Background Configuration Issues

The background layer in an MHP receiver can display either a solid colour or a still image. An application can choose which of these two options to use by setting the appropriate preference in the HBackgroundConfigTemplate when getting a configuration. If an application chooses the still image configuration, then the configuration that’s actually returned will be a subclass of HBackgroundConfiguration.

This subclass, HStillImageBackgroundConfiguration, adds two extra methods over the standard HBackgroundConfiguration class:

    public void displayImage(HBackgroundImage image);
    public void displayImage(HBackgroundImage image, HScreenRectangle r);

Both of these methods take an HBackgroundImage as a parameter. HBackgroundImage is a class designed to handle images in the background layer, as its name suggests. Why do we need a separate class for this? After all, we’ve got a perfectly good java.awt.Image class.

The problem is that the background image isn’t part of the AWT component hierarchy, so the AWT Image class isn’t really suitable. The HBackgroundImage class is designed as a replacement that doesn’t have all the baggage of the AWT Image class, and only has methods that are needed for displaying background images.

    public class HBackgroundImage {
        public HBackgroundImage(String filename);
        public void load(HBackgroundImageListener l);
        public void flush();
        public int getHeight();
        public int getWidth();
    }

We won’t examine this class in too much detail because it’s not very complex, but at the same time you should at least know of its existence and how to use it. The HBackgroundImage class takes the filename of an MPEG I-frame image as an argument to the constructor, and the image must be loaded and disposed of explicitly, using the load() and flush() methods respectively. This has the big advantage of allowing the application absolute control over when the image (which may be quite large) is resident in memory.

Device Configuration Gotchas

As we’ve seen, setting the configuration of a display device is a pretty complex business, and there are a number of things that developers need to be aware of when they are developing applications.

The first of these is that the different shapes of video and graphics pixels may mean that the video and graphics are not perfectly aligned. Luckily this can be fixed by setting the VIDEO_GRAPHICS_PIXEL_ALIGNED preference in the configuration template, although there’s no guarantee that a receiver can actually support this. As if this wasn’t enough, there are other factors that can cause display problems. Overscan in the TV can mean that 5% of the display is off-screen, so developers should stick to the ‘safe’ area which does not include the 5% of the display near each of the screen edges. If you really want to put graphics over the entire display, be aware that some of them may not appear on the screen. More information about safe areas is given in the tutorial on designing for the TV.
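The safe area rule of thumb above translates into a simple calculation. This sketch assumes the 5% margin on each edge quoted above and a 720×576 screen coordinate space, and rounds the margins up so that the result always stays inside the safe area. SafeArea and safeArea() are hypothetical names, and real broadcaster guidelines may specify different margins.

```java
// Hypothetical helper: compute a safe area inset by a percentage from
// each edge. Margins are rounded up so the result never strays outside.
final class SafeArea {

    /** Returns {x, y, width, height} of the safe area in screen coordinates. */
    static int[] safeArea(int screenWidth, int screenHeight, int marginPercent) {
        int marginX = ceilDiv(screenWidth * marginPercent, 100);
        int marginY = ceilDiv(screenHeight * marginPercent, 100);
        return new int[] {
            marginX,
            marginY,
            screenWidth - 2 * marginX,
            screenHeight - 2 * marginY
        };
    }

    // Integer division rounded up, for non-negative operands.
    private static int ceilDiv(int a, int b) {
        return (a + b - 1) / b;
    }
}
```

For a 720×576 screen, safeArea(720, 576, 5) gives {36, 29, 648, 518}: a 648×518 rectangle whose top-left corner is at (36, 29). Integer arithmetic is used deliberately, to avoid floating-point surprises when rounding the margins.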

Another problem is that what the receiver outputs may not be what’s actually displayed on the screen. Many modern TV sets allow the viewer to control the aspect ratio, so the receiver may be producing a 4:3 signal that’s being displayed by the TV in 16:9 mode. Even then, there are various options for how the TV will actually adjust the display to the new aspect ratio. Your application can’t do anything about this, because it won’t even know it is happening, but you should still be aware of it as a developer: even if the application isn’t broadcast as part of a 16:9 signal now, it may be in the future.

Finally, as we’ve seen earlier, the graphics, video and background configurations are not completely independent, and changing one may have an effect on the others. Applications that care about the graphics or video configuration should monitor them, to make sure that the configuration stays compatible with what the application is expecting, and to be able to adapt when the configuration does change.

Coordinate systems in MHP

The three display layers in MHP don’t just cause us problems when we configure them. MHP also has three different coordinate systems, which provide several different ways of positioning objects accurately on the screen. Typically, an MHP application that makes heavy use of graphics will need to be aware of all of them, even if it doesn’t use them all. Different APIs support different coordinate systems.

Normalized coordinates

The normalized coordinate system has its origin in the top left corner of the screen, and has a maximum value of (1, 1) in the bottom right corner. This is an abstract coordinate system that allows the positioning of objects relative to each other without specifying absolute coordinates. Thus, an application can decide to place an object in the very centre of the screen without having to know the exact screen resolution.

Normalized coordinates are usually used by the HAVi classes, in particular by the HScreenPoint and HScreenRectangle classes.

Screen coordinates

The screen coordinate system also has its origin in the top left corner of the screen, and has its maximum X and Y coordinates in the bottom right of the screen. These maximum values are not fixed, and will vary with the display device. The MHP specification states that the maximum X and Y coordinates will be at least (720, 576), however.

Effectively, the screen coordinate space is defined by the HGraphicsDevice. Screen coordinates are used by HAVi for positioning HScenes and for configuring the resolution of HScreenDevice objects.

AWT coordinates

The AWT coordinate system is the standard system used by AWT (but you’d probably guessed that from the name). Like the screen coordinate system, it is pixel based, but instead of dealing with the entire screen, it deals with the root window for the application. The origin is in the top left corner of the AWT root container for the application (which, as we shall see below, is the HScene), and its maximum values are at the bottom right corner of that container.

Coordinate systems in the MHP graphics model.

This is a very rough guide to the coordinate systems used in MHP. If you are feeling very curious, the MHP specification describes the various coordinate systems and the relationship between them in more detail than is ever likely to be useful to you.
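The relationship between the three coordinate systems can be sketched as two conversions: normalized to screen coordinates (multiply by the screen resolution), and screen to AWT coordinates (subtract the position of the HScene’s top-left corner). This is a simplified model with hypothetical names. It assumes that the HScene’s pixels map one-to-one onto screen pixels, which need not be true if the scene uses a different pixel resolution; the MHP specification defines the full relationship.

```java
// Hypothetical helpers illustrating MHP's coordinate systems.
// Assumes one scene pixel equals one screen pixel (no scene scaling).
final class Coords {

    /** Normalized (0.0 to 1.0) coordinates to screen pixels. */
    static int[] normalizedToScreen(float nx, float ny,
                                    int screenWidth, int screenHeight) {
        return new int[] { Math.round(nx * screenWidth),
                           Math.round(ny * screenHeight) };
    }

    /**
     * Screen pixels to AWT coordinates inside a scene whose top-left
     * corner is at (sceneX, sceneY) in screen coordinates.
     */
    static int[] screenToAwt(int sx, int sy, int sceneX, int sceneY) {
        return new int[] { sx - sceneX, sy - sceneY };
    }
}
```

On a 720×576 display, the normalized point (0.5, 0.5) maps to screen pixel (360, 288); if the application’s HScene starts at screen position (100, 50), the same point is at (260, 238) in AWT coordinates.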

HScenes and HSceneTemplates

Since set-top boxes usually have such limited memory and CPU power, it’s not very likely that a set-top box will run a fully-fledged window manager. What this means for MHP developers is that you can’t use the java.awt.Frame class as a top-level window for applications, because this class is simply too dependent on a window manager to work without one.

Instead, we use the org.havi.ui.HScene class. This defines something conceptually similar to a Frame, but without all the extra baggage that a Frame has associated with it. It also has a few security limitations that the Frame class doesn’t. The biggest of these is that an application cannot see any more of the AWT hierarchy than its own HScene and the components in it. HScenes from other applications are not visible to it, and other applications can’t see the HScene belonging to this application. Thus, every application is almost totally isolated from the graphical activities of any other application. We’ll see what ‘almost’ means in a moment.

How HScenes separate the various parts of the AWT display hierarchy.

In the diagram above, the application that owns the white components cannot manipulate the shaded components, since these belong to other applications. Indeed, the application won’t even know that these components exist.

One of the other differences between an HScene and a Frame is that an application may only create one HScene – any attempts to create an HScene before the current one is disposed of will fail. While this may seem strange at first, it makes sense if you remember that the receiver probably has no window manager – after all, how many applications need more than one top-level window?

The only case where an application is allowed to have more than one HScene is where the HScenes appear on different HScreens (i.e. on different display devices).

The HScene also lets us control TV-specific features that a Frame doesn’t handle, such as the aspect ratio of the screen and the blending and transparency between the graphics layer and the other layers of the display.

Given these differences, and the amount of information that we actually need to specify to set up an HScene the way we want it, we can’t simply create one in the same way that we’d create a Frame. Instead, we use the org.havi.ui.HSceneFactory class to create one for us. This allows the runtime system to make sure that the HScene we get is as close to our requirements as it could be, while at the same time meeting all the limitations of the platform. For instance, some platforms may not allow HScenes to overlap on screen. In this case, if you request an HScene that overlaps with an existing one, that request can’t be met and so your application will not get the HScene that it requested.

We tell the HSceneFactory what type of HScene we want using almost the same technique that was used for screen devices.

The HSceneTemplate class allows us to specify a set of constraints on our HScene, such as size, location on screen and a variety of other factors (see the interface below), and it also lets us specify how important those constraints are to us. For instance, we may need our HScene to be a certain size so that we can fit all our UI elements into it, but we may not care so much where on screen it appears. To handle this, each property on an HScene has a priority – either REQUIRED, PREFERRED or UNNECESSARY. This lets us specify the relative importance of the various properties.

    public class HSceneTemplate extends Object {
        // priorities
        public static final int REQUIRED;
        public static final int PREFERRED;
        public static final int UNNECESSARY;

        // possible preferences that can be set
        public static final Dimension LARGEST_PIXEL_DIMENSION;
        public static final int GRAPHICS_CONFIGURATION;
        public static final int SCENE_PIXEL_RESOLUTION;
        public static final int SCENE_PIXEL_RECTANGLE;
        public static final int SCENE_SCREEN_RECTANGLE;

        // methods to set and get preferences and their priorities
        public void setPreference(int preference, Object object, int priority);
        public Object getPreferenceObject(int preference);
        public int getPreferencePriority(int preference);
    }

Properties that are UNNECESSARY will be ignored when the HSceneFactory attempts to create the HScene, and PREFERRED properties may be ignored if that’s the only way to create an HScene that fits the other constraints. However, REQUIRED properties will never be ignored – if the HSceneFactory can’t create an HScene that meets all the REQUIRED properties specified in the template while still meeting all the other constraints that may exist, the calling application will not be given an HScene.
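To make the interaction between priorities and platform constraints more concrete, here is a toy placement policy for the case mentioned earlier: a platform that does not allow HScenes to overlap. It treats the requested size as REQUIRED and the requested location as PREFERRED, so it tries the requested position first, then scans the screen on a coarse grid for another position, and returns null if nothing fits. ToySceneFactory and place() are hypothetical; this is not how HSceneFactory is specified to work, only an illustration of REQUIRED versus PREFERRED semantics.

```java
import java.awt.Rectangle;
import java.util.List;

// Hypothetical placement policy for a platform that forbids overlapping
// scenes. Size is REQUIRED (never changed); location is PREFERRED.
final class ToySceneFactory {

    static Rectangle place(Rectangle wanted, List<Rectangle> existing,
                           int screenWidth, int screenHeight, int step) {
        // Honour the preferred location if we can.
        if (fits(wanted, existing, screenWidth, screenHeight)) {
            return new Rectangle(wanted);
        }
        // Otherwise drop the location preference and search for a free spot.
        for (int y = 0; y + wanted.height <= screenHeight; y += step) {
            for (int x = 0; x + wanted.width <= screenWidth; x += step) {
                Rectangle r = new Rectangle(x, y, wanted.width, wanted.height);
                if (fits(r, existing, screenWidth, screenHeight)) {
                    return r;
                }
            }
        }
        // The REQUIRED size can't be met anywhere: no scene for you.
        return null;
    }

    private static boolean fits(Rectangle r, List<Rectangle> existing,
                                int screenWidth, int screenHeight) {
        if (r.x < 0 || r.y < 0
                || r.x + r.width > screenWidth
                || r.y + r.height > screenHeight) {
            return false;
        }
        for (Rectangle other : existing) {
            if (other.intersects(r)) {
                return false;
            }
        }
        return true;
    }
}
```

For example, if another application’s scene already occupies the left half of a 720×576 screen, a request for a 200×200 scene at (100, 100) is moved to (360, 0) when scanning on a 40-pixel grid, while a request for the whole screen fails and returns null.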

So now that we’ve seen the mechanism that we need to use to get an HScene, how do we actually do it?

The HSceneFactory.getBestScene() method takes an HSceneTemplate as an argument, and will return an HScene if the constraints in the HSceneTemplate can be met, or a null reference otherwise. Once you have the HScene, it can be treated just like any other AWT container class. The only real difference is that you have to explicitly dispose of an HScene when you’re done with it, so that any resources that it has can be freed up.

If we look at the HSceneFactory in more detail, we can see that there are a few other methods that allow us to manipulate HScenes:

    public class HSceneFactory extends Object {
        public static HSceneFactory getInstance();
        public HSceneTemplate getBestSceneTemplate(HSceneTemplate hst);
        public HScene getBestScene(HSceneTemplate hst);
        public void dispose(HScene scene);
        public HSceneTemplate resizeScene(HScene hs, HSceneTemplate hst)
            throws java.lang.IllegalStateException;
        public HScene getSelectedScene(HGraphicsConfiguration[] selection,
                                       HScreenRectangle screenRectangle,
                                       Dimension resolution);
        public HScene getFullScreenScene(HGraphicsDevice device,
                                         Dimension resolution);
    }

We’ve already seen the dispose() and getBestScene() methods, and some of the other methods are self-explanatory. The getBestSceneTemplate() method allows an application to ‘negotiate’ for available resources – the application calls this method with an HSceneTemplate that describes the HScene it would ideally like, and the HSceneTemplate that gets returned describes the closest match that could fit the input template.

Of course, since an MHP receiver can have more than one application running at the same time, there’s no guarantee that an HScene can be created that exactly matches this ‘best’ HSceneTemplate since another application may create an HScene or do something else that changes the graphics configuration in an incompatible way.

High-Level Graphics Issues

Now that we’ve seen the lower-level graphics issues we can discuss some of the higher level issues relating to graphics and user interfaces in MHP.

The first thing to cover is the image formats that are supported by MHP. MHP supports the following four image formats:

- GIF

- JPEG

- PNG

- MPEG I-frame

In addition to this, it supports both the DVB subtitle and DVB teletext formats for subtitles (yes, this is confusing). The image formats can be used with any java.awt.Image object, just like you’d expect.

To manipulate the subtitles, however, you need to use the org.davic.media.SubtitlingLanguageControl. This is a Java Media Framework control (see the section on JMF for information about JMF controls) that allows you to turn subtitles on or off, query their status and select the language of the subtitles. This control is optional, however, so many receivers may not support it.

Colours and transparency in MHP

As I mentioned at the start of this section, one of the differences between Java graphics and TV signals is that Java graphics use the RGB colour system while TV signals use the YUV system. You’ll be pleased to hear that all conversion is handled within the MHP implementation, and that developers don’t need to care about this.
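Although the receiver does this conversion for you, it can help to see what it involves. The sketch below converts 8-bit RGB values to studio-range Y'CbCr (the digital form of YUV used for standard-definition TV) using the ITU-R BT.601 coefficients. Which conversion a particular receiver uses internally is not something this tutorial specifies, so treat this as an illustration rather than a description of any receiver; the Bt601 class name is hypothetical.

```java
// Illustrative RGB to Y'CbCr conversion using ITU-R BT.601 studio-range
// coefficients (Y in 16..235, Cb and Cr in 16..240). Not an MHP API.
final class Bt601 {

    /** Returns {Y, Cb, Cr} for 8-bit R, G, B inputs. */
    static int[] rgbToYCbCr(int r, int g, int b) {
        // Normalize each component to the range 0.0 to 1.0.
        double rn = r / 255.0;
        double gn = g / 255.0;
        double bn = b / 255.0;
        double y  =  16 + 65.481 * rn + 128.553 * gn +  24.966 * bn;
        double cb = 128 - 37.797 * rn -  74.203 * gn + 112.0   * bn;
        double cr = 128 + 112.0  * rn -  93.786 * gn -  18.214 * bn;
        return new int[] { (int) Math.round(y),
                           (int) Math.round(cb),
                           (int) Math.round(cr) };
    }
}
```

With these coefficients, black (0, 0, 0) becomes Y = 16, Cb = Cr = 128, white becomes Y = 235 with the same neutral Cb and Cr, and pure red becomes roughly (81, 90, 240).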

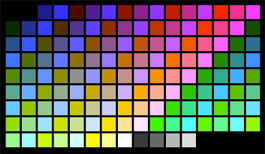

While most MHP receivers will support 24-bit color, not every receiver will do so. The MHP specification only requires receivers to support a 256-color CLUT for graphics, and so developers and designers should take care to ensure that their graphics look good in 256 colors as well as in 24-bit color. To complicate things even more, some of these 256 colours are reserved for subtitles and the on-screen display. This leaves 188 colours available, and MHP defines the color palette shown below for use in MHP applications:

The 188-color CLUT available to MHP applications.

Any colors that are not in the palette may be mapped to their nearest equivalent (or dithered) in some MHP receivers. The palette entries used by the subtitle decoder and/or the OSD can also be used by MHP applications, but these may vary between implementations.
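A receiver that cannot display a colour has to pick something close to it. The following sketch shows the simplest form of nearest-colour matching, using squared distance in RGB space. Both the small palette used in the example below and the distance measure are assumptions for illustration: this is not the MHP palette, and real receivers may use a different metric or dither instead. NearestColour is a hypothetical name.

```java
// Hypothetical nearest-colour lookup: returns the index of the palette
// entry with the smallest squared RGB distance to the requested colour.
final class NearestColour {

    static int nearest(int[][] palette, int r, int g, int b) {
        int best = 0;
        long bestDistance = Long.MAX_VALUE;
        for (int i = 0; i < palette.length; i++) {
            long dr = palette[i][0] - r;
            long dg = palette[i][1] - g;
            long db = palette[i][2] - b;
            long distance = dr * dr + dg * dg + db * db;
            if (distance < bestDistance) {
                bestDistance = distance;
                best = i;
            }
        }
        return best;
    }
}
```

With the palette {black, white, red}, the colour (200, 30, 30) maps to red (index 2) and (240, 240, 240) maps to white (index 1). Running artwork through the real 188-colour palette in a similar way is one way to check how it will look on a 256-colour receiver.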

Some aspects of the display are likely to be more immune to this problem than others, however. Background images using MPEG I-frames will almost always support 24-bit color.

There is one more colour-related issue that you should be aware of. Given that an MHP receiver is primarily a video-based device (and I just know that someone will probably disagree with this, but I’ll say it anyway), it’s generally useful for the graphics layer to be able to contain elements that are transparent through to the video layer so that the video can be seen through it. Since MHP is based on the Java 1.1 APIs, though, there is no default support in AWT for this.

MHP allows colours to be transparent to the video layer by using a mechanism similar to the java.awt.Color class in Java 2. The class org.dvb.ui.DvbColor adds an alpha value to the java.awt.Color class from JDK 1.1. While the alpha (transparency) level is an eight-bit value, receivers are only required to support fully transparent, approximately 30% transparent and fully opaque colours. Other levels may also be supported, but this can’t be guaranteed. If the application specifies a transparency value that is not supported, the actual value will be rounded to the closest supported value, with two exceptions: a transparency value greater than 10% will not be rounded to 0%, and a transparency value smaller than 90% will not be rounded up to 100%. Therefore, assuming that the receiver supports only the 0%, 30% and 100% transparency levels, an application requesting a transparency level between 10% and 90% will always get a transparency level of 30% in practice.
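The rounding rule above is easy to get wrong, so here is a small model of it. It assumes a receiver that supports only the three mandatory levels (0%, 30% and 100% transparency) and expresses transparency as a percentage. DvbColor itself works with an alpha value, where 255 means fully opaque, so a helper converts between the two. TransparencyRounding and its methods are hypothetical names.

```java
// Hypothetical model of the MHP transparency rounding rule, assuming a
// receiver that supports only the three mandatory transparency levels.
final class TransparencyRounding {

    // Supported transparency levels in percent (0 = opaque, 100 = invisible).
    static final int[] SUPPORTED = {0, 30, 100};

    /** Maps a requested transparency percentage to a supported level. */
    static int round(int requested) {
        int best = -1;
        int bestDistance = Integer.MAX_VALUE;
        for (int level : SUPPORTED) {
            // Exception 1: more than 10% transparent never becomes opaque.
            if (level == 0 && requested > 10) continue;
            // Exception 2: less than 90% transparent never becomes invisible.
            if (level == 100 && requested < 90) continue;
            int distance = Math.abs(level - requested);
            if (distance < bestDistance) {
                bestDistance = distance;
                best = level;
            }
        }
        return best;
    }

    /** Converts an 8-bit alpha value (255 = opaque) to a transparency percentage. */
    static int alphaToTransparency(int alpha) {
        return Math.round((255 - alpha) * 100 / 255.0f);
    }
}
```

Requests of 5% and 95% round to 0% and 100% as you’d expect, but a request of 85% (an alpha value of 38) gives 30%, because anything below 90% may not be rounded up to fully transparent.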

The MHP Widget Set

Something else that we mentioned earlier is the fact that the AWT package in MHP has been cut down drastically from the desktop AWT version that developers are familiar with. The MHP version of AWT supports ‘lightweight’ components only (i.e. those with no peer classes), which rules out things like java.awt.Button, java.awt.Dialog and java.awt.Menu.

The HAVi Level 2 GUI that is used as part of MHP (the org.havi.ui package that we’ve already seen) provides a set of widgets that can be used instead of the missing AWT ones. The widgets provided by the HAVI API are the standard set that you’d expect to find in any GUI system:

- buttons, check-boxes and radio buttons

- icons

- scrollable lists

- dialog boxes (these are a subclass of HContainer, the HAVi replacement for the java.awt.Container class)

- text entry fields

Of course, this is not a comprehensive list, and there are others. Check the org.havi.ui package for details of the entire widget set.

One of the problems that application designers have with any standard widget set, of course, is that it enforces a standard look and feel. While a standard look and feel is fine (and generally considered essential) for a PC application, on a TV it’s not so important or so useful. In a TV environment, the interaction model is usually simpler; also, the emphasis is less on efficiency and more on fun and on differentiating your application from every other application (although this does not give iTV developers an excuse to design bad user interfaces).

To help content developers differentiate their applications from everyone else’s, the HAVi API allows the appearance of the widget set to be changed fairly easily. The org.havi.ui.HLook interface and the classes that implement it allow an application to override the paint() method for certain GUI classes without having to define new subclasses for the GUI elements that will get the new look. HLook objects can only be used with the org.havi.ui.HVisible class and its subclasses, but this still offers a fairly large selection of possibilities.

The HLook.showLook() method is called by the paint() method of HVisible and its subclasses, so applications themselves do not need to call it. Effectively, an HLook takes over the methods that are related to drawing the GUI element. An HLook instance is attached to an HVisible object using the HVisible.setLook() method. This takes an HLook object as an argument, and simply makes the HVisible use that HLook object instead of its internal implementation of the paint() method.
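The delegation idea behind HLook can be shown without the HAVi classes themselves. In this sketch, Look, LookWidget and FlatLook are hypothetical stand-ins for HLook, HVisible and a custom look, with much simpler signatures than the real org.havi.ui interfaces. The widget’s paint() method does no drawing of its own and hands the Graphics object to whatever look is currently attached, so changing the appearance only means calling setLook() with a different object.

```java
import java.awt.Color;
import java.awt.Graphics;

// Hypothetical stand-in for HLook: draws a widget on its behalf.
interface Look {
    void showLook(Graphics g, LookWidget widget);
}

// Hypothetical stand-in for HVisible: delegates all drawing to its look.
class LookWidget {
    final int width;
    final int height;
    private Look look;

    LookWidget(int width, int height) {
        this.width = width;
        this.height = height;
    }

    void setLook(Look look) {
        this.look = look;
    }

    // paint() never draws anything itself; the attached look does.
    void paint(Graphics g) {
        if (look != null) {
            look.showLook(g, this);
        }
    }
}

// A custom look that fills the widget with a single colour.
final class FlatLook implements Look {
    private final Color fill;

    FlatLook(Color fill) {
        this.fill = fill;
    }

    public void showLook(Graphics g, LookWidget widget) {
        g.setColor(fill);
        g.fillRect(0, 0, widget.width, widget.height);
    }
}
```

Painting a LookWidget into an off-screen java.awt.image.BufferedImage after setLook(new FlatLook(Color.RED)) fills it with red; calling setLook(new FlatLook(Color.BLUE)) and repainting changes the appearance without subclassing the widget.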

The most popular way for applications to provide their own look and feel so far, however, is simply to provide their own widgets. It’s not clear whether this is because developers are still unfamiliar with the HAVi API set, or whether there is a real benefit to rolling your own widgets. The big advantage seems to be for applications that don’t require complex widgets; then again, complex widgets are a potential usability nightmare on a system where you may only have a TV remote control for user input. Navigating around complex user interfaces with just a remote control is difficult, and can be a major source of problems for applications such as email clients and T-commerce applications. Designers should be very careful to design an interface that is appropriate for the television, instead of designing a PC-style user interface.

Swing

The Java Foundation Classes (also known as Swing) are not supported by MHP. Support may be added in a future version of the specification, but until the graphics hardware in digital TV receivers improves, there is not likely to be much demand for it from network operators.